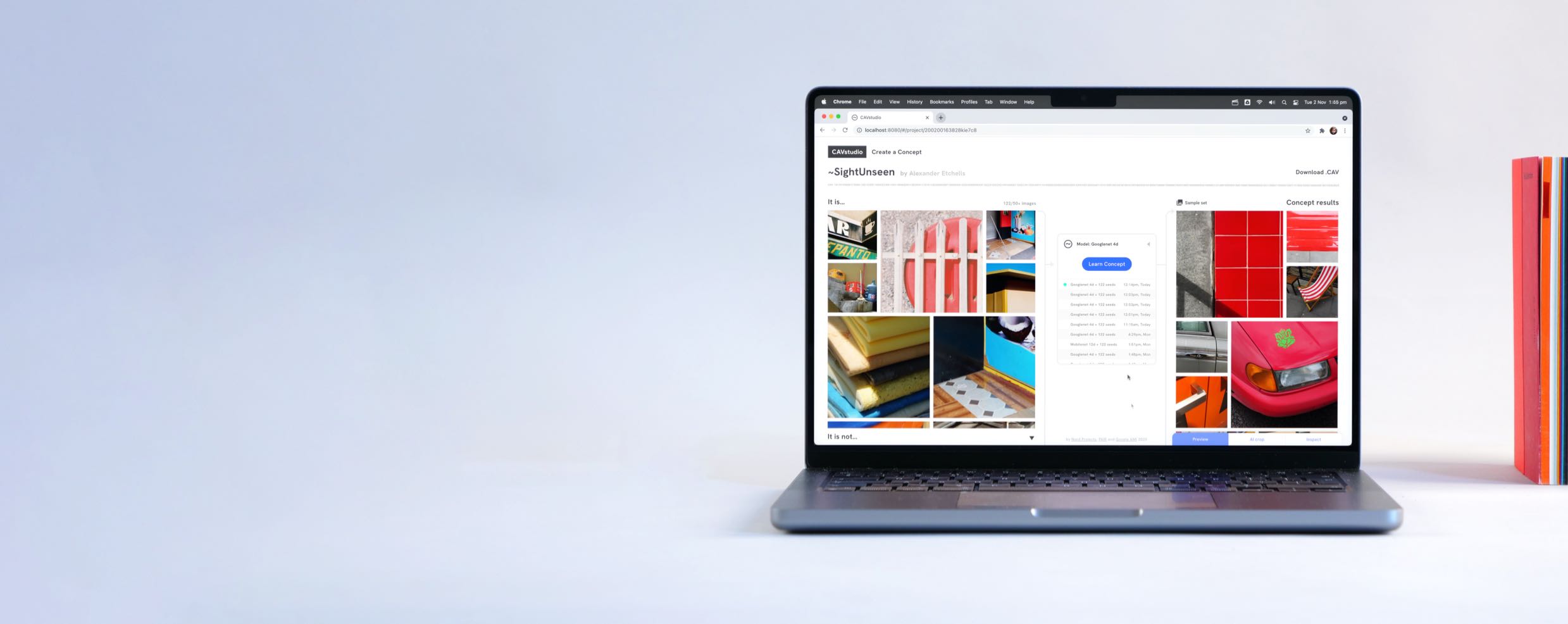

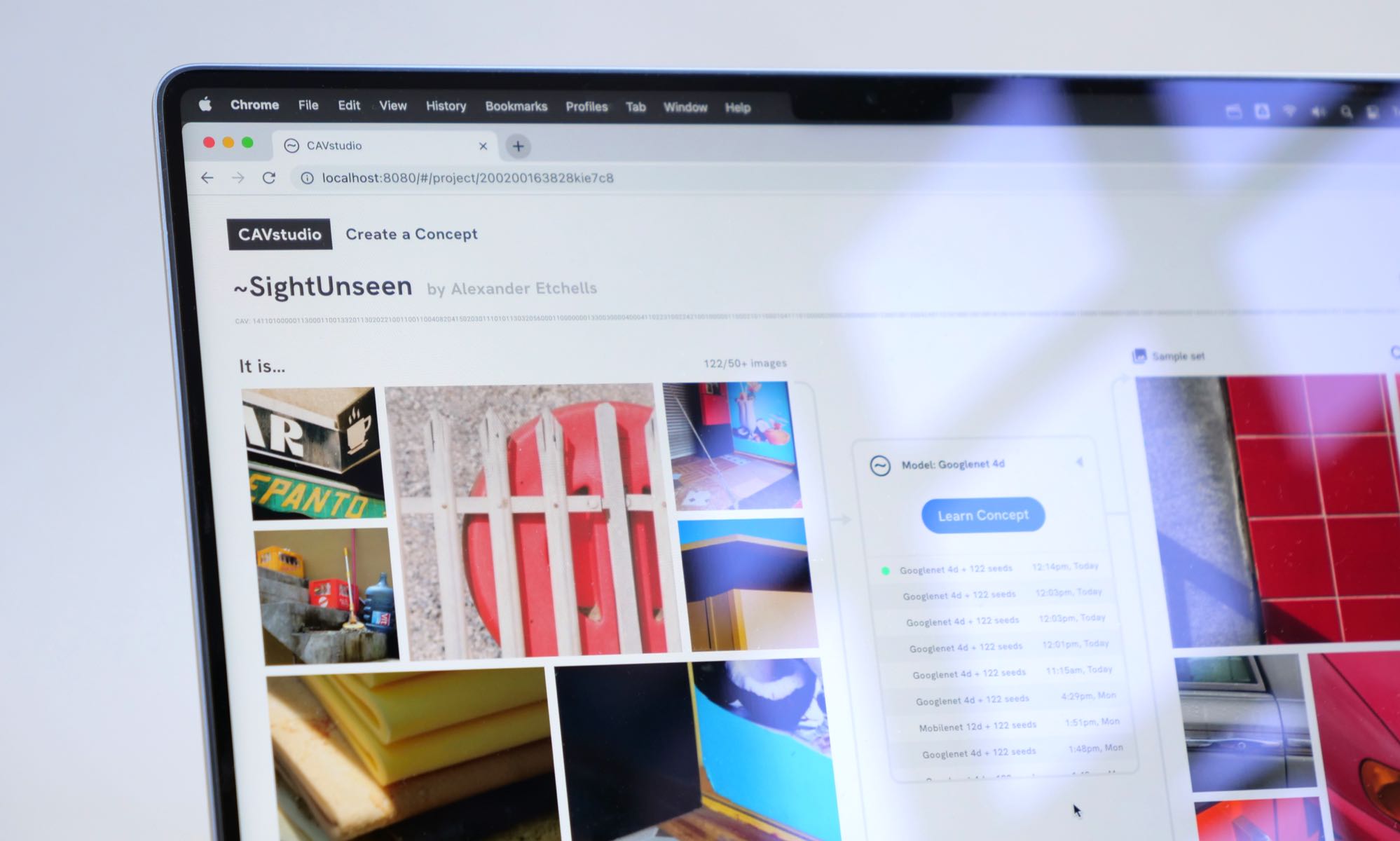

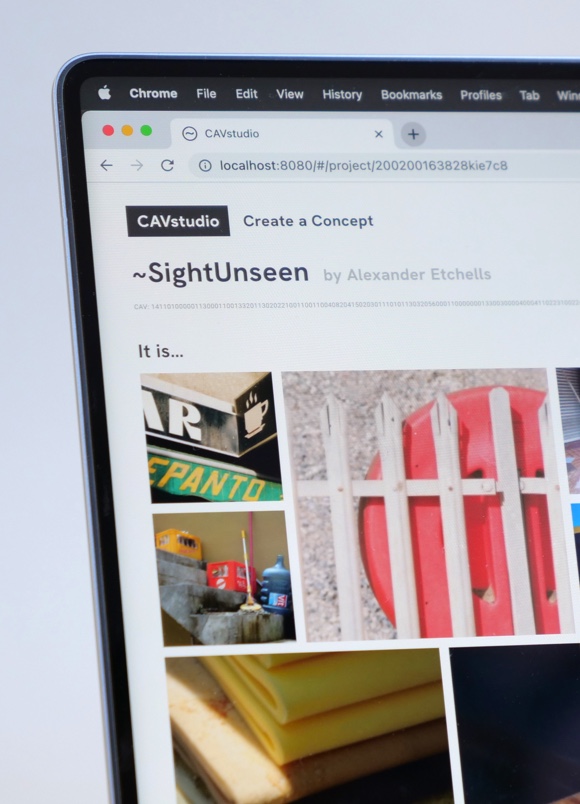

CAVstudio: a visual tool for training AIs on subjective concepts

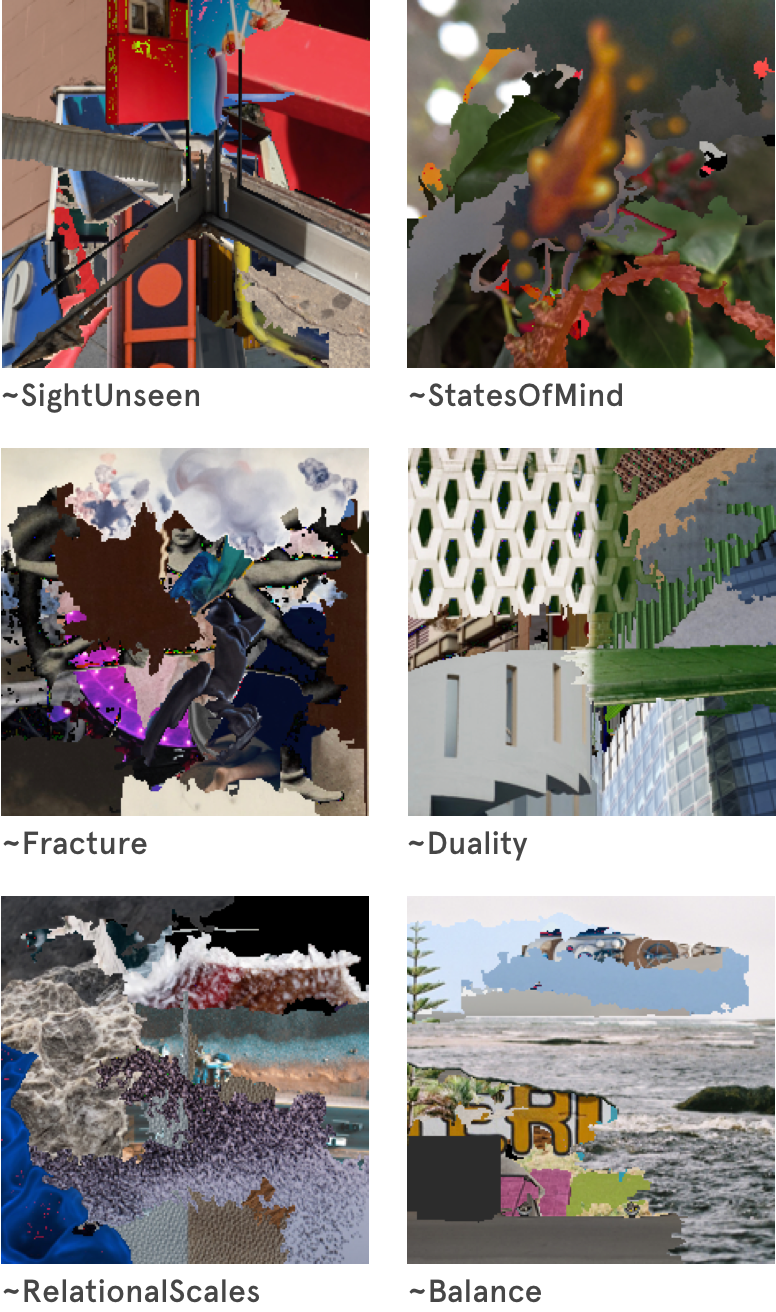

Using Concept Activation Vectors to power more nuanced, visual search.

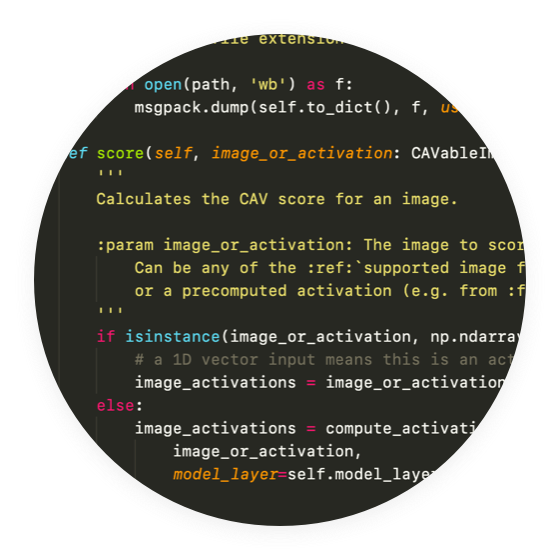

Material exploration with Concept Activation Vectors, interaction design-led R&D, advanced prototyping, on-device ML.