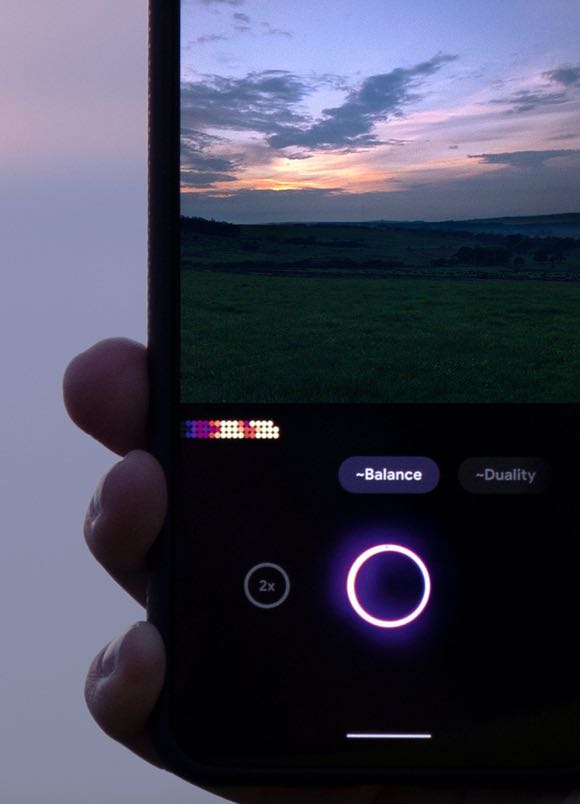

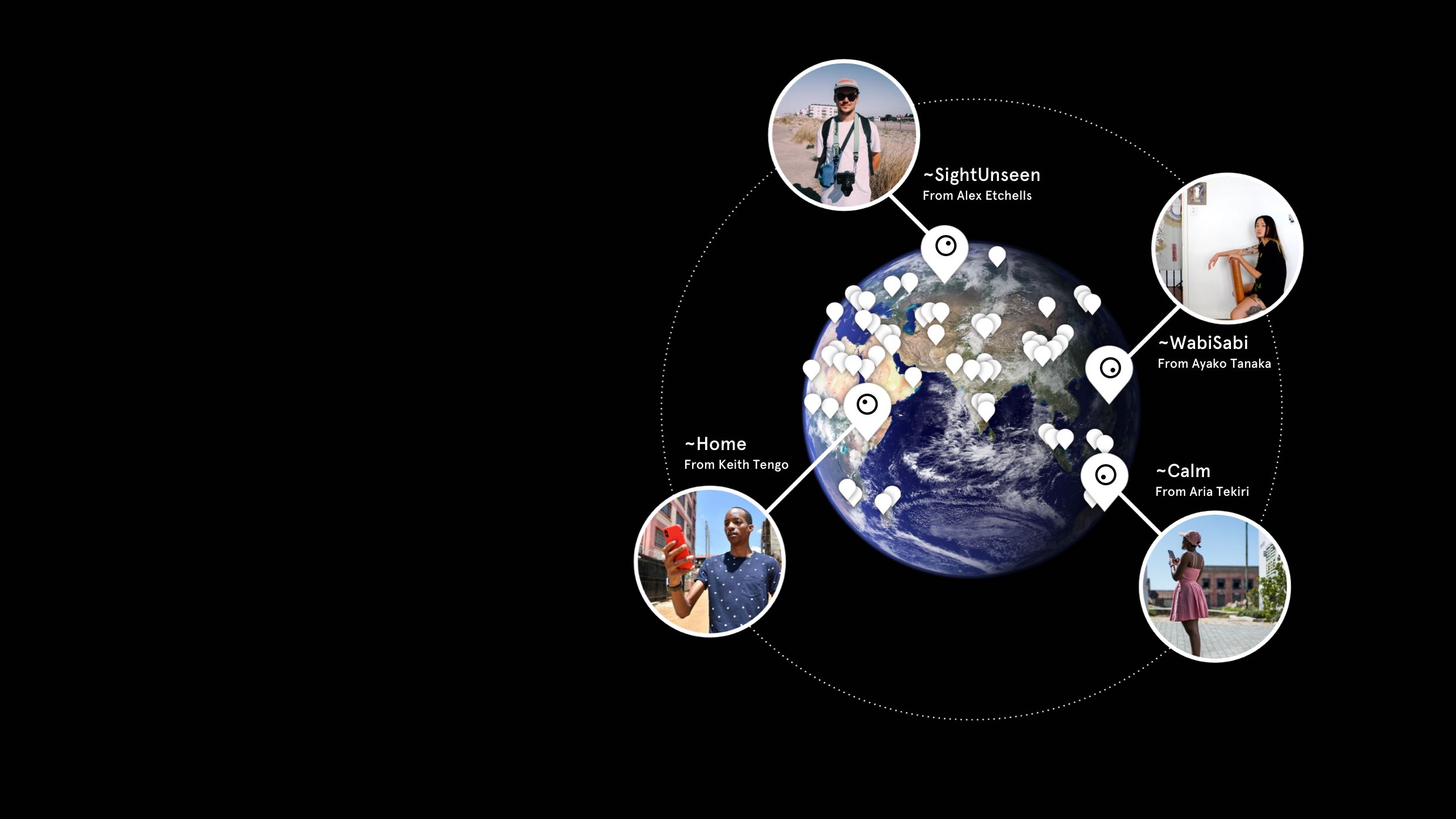

Camera as a shared Human:AI control surface

Placing the AI directly in the shutter button lets it signal when it might be a good moment to take a photo, using colour and dynamism to express the CAV-score of the current composition.

We tried to make the shutter feel expressive without influencing the user too much: the user stays in control, with the AI as co-pilot.
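As an illustration, the shutter behaviour might be sketched as follows. This is a minimal sketch, not the implementation described here. It assumes the CAV-score is the cosine similarity between an image embedding and a concept activation vector (CAV), rescaled to [0, 1]. The colours, the pulse rates and the helper names `cav_score` and `shutter_style` are all hypothetical.

```python
import math
from typing import Sequence, Tuple


def cav_score(embedding: Sequence[float], cav: Sequence[float]) -> float:
    """Hypothetical score: cosine similarity between an image embedding and
    a concept activation vector, rescaled from [-1, 1] to [0, 1]."""
    dot = sum(e * c for e, c in zip(embedding, cav))
    norm = math.sqrt(sum(e * e for e in embedding)) * math.sqrt(sum(c * c for c in cav))
    if norm == 0:
        return 0.0
    return (dot / norm + 1.0) / 2.0


def shutter_style(score: float) -> Tuple[Tuple[int, int, int], float]:
    """Map a score in [0, 1] to an RGB colour and a pulse rate in Hz.

    Low scores stay a calm grey; high scores shift towards a bright accent
    and pulse faster. Colours and rates are illustrative assumptions."""
    s = min(max(score, 0.0), 1.0)  # clamp so out-of-range scores stay sane
    grey, accent = (128, 128, 128), (255, 196, 0)
    colour = tuple(round(g + (a - g) * s) for g, a in zip(grey, accent))
    pulse_hz = 0.5 + 1.5 * s  # 0.5 Hz when idle, up to 2 Hz on a strong match
    return colour, pulse_hz
```

A smooth, monotonic mapping (a gradual colour shift and a slow pulse rather than a prompt to shoot) is one way to keep the signal expressive without taking control away from the user.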

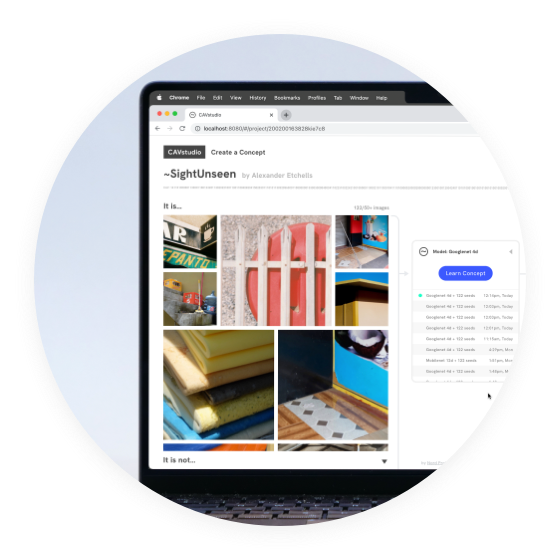

Transparency and control

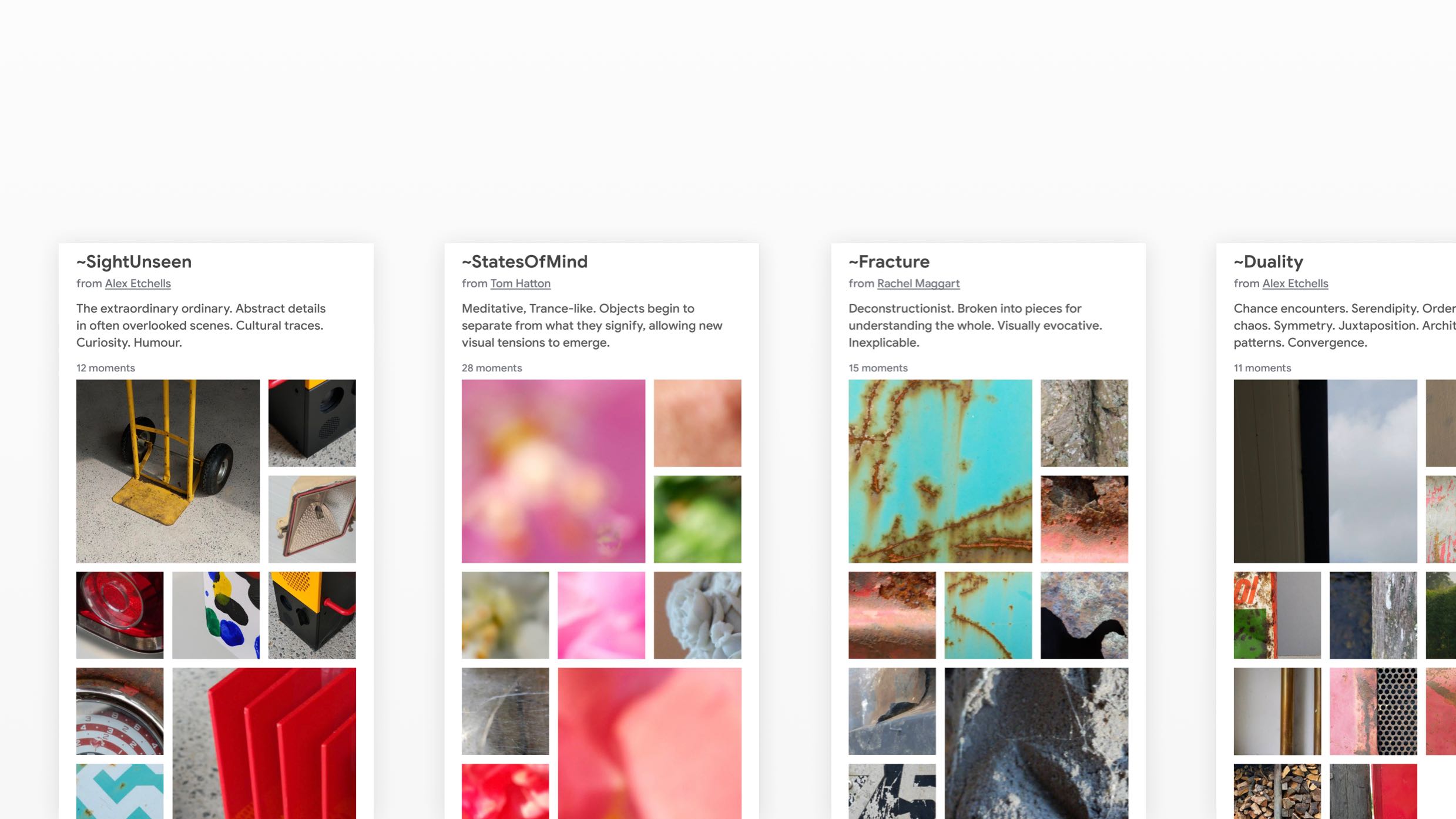

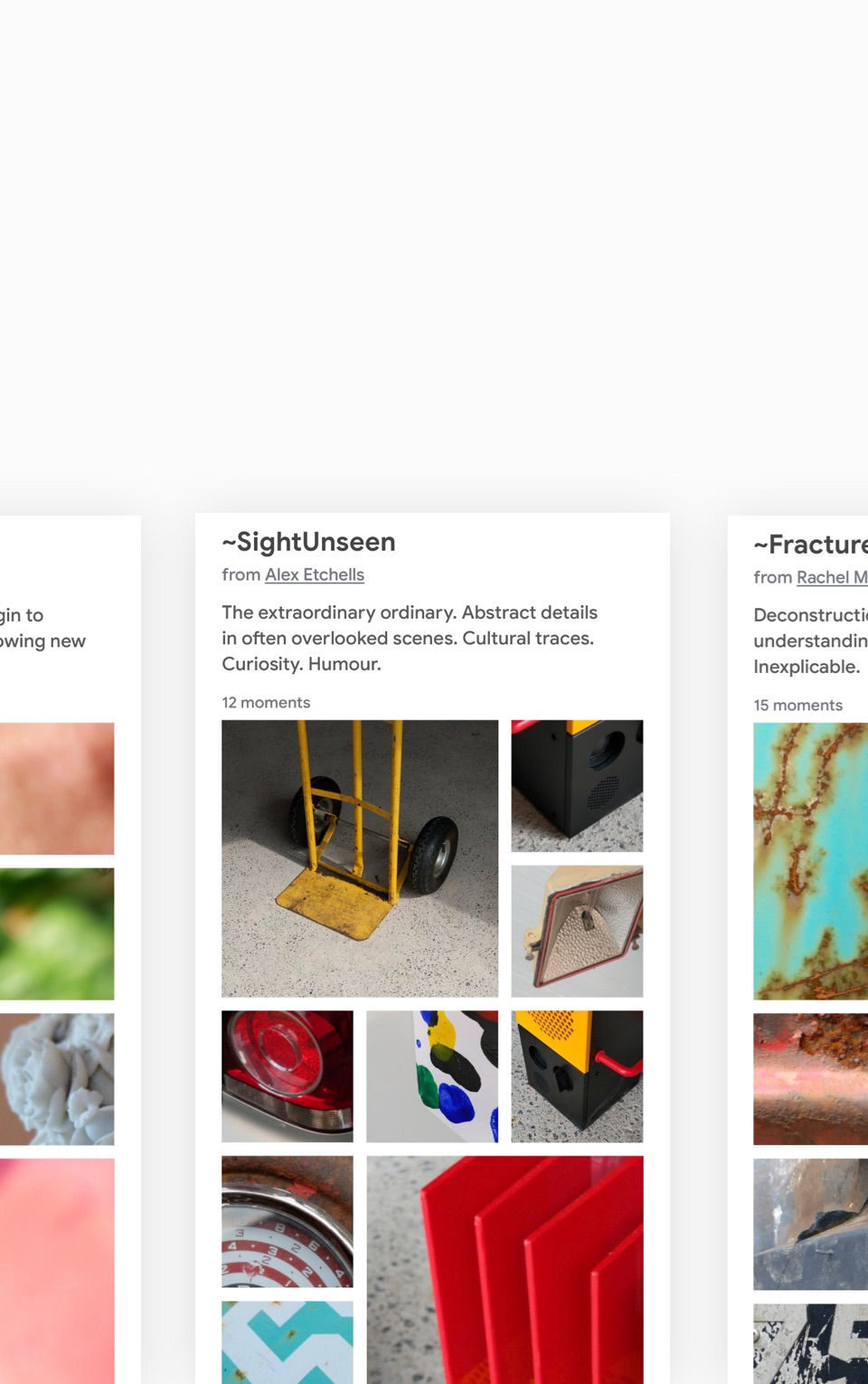

We use the CAV-score to decide which images to shortlist. Results are split into ‘close’, ‘related’ and ‘distant’: labels that imply a qualitative judgement while leaving room for human taste to reinterpret it.
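The shortlisting step can be sketched as a banding of scores. The thresholds `CLOSE_MIN` and `RELATED_MIN`, and the helpers `bucket` and `shortlist`, are hypothetical; the section does not say where the boundaries sit or how scores are scaled.

```python
from typing import Dict, List

# Hypothetical thresholds on a [0, 1] score; the real cut-offs are not stated.
CLOSE_MIN = 0.75
RELATED_MIN = 0.5


def bucket(score: float) -> str:
    """Label a score with a qualitative band rather than a raw number."""
    if score >= CLOSE_MIN:
        return "close"
    if score >= RELATED_MIN:
        return "related"
    return "distant"


def shortlist(scores: Dict[str, float]) -> Dict[str, List[str]]:
    """Group image ids by band, highest-scoring first within each band."""
    bands: Dict[str, List[str]] = {"close": [], "related": [], "distant": []}
    for image_id, score in sorted(scores.items(), key=lambda kv: kv[1], reverse=True):
        bands[bucket(score)].append(image_id)
    return bands
```

Only the band is surfaced, not the raw number, which is what leaves room for the user to reinterpret the result.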

The AI auto-crops images to optimise their composition against the concept. This can feel magical, revealing high-scoring parts of an image that might not have been your intended focus. If you prefer, you can always revert to the original.
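One way to auto-crop is an exhaustive search over candidate crops, keeping whichever scores highest against the concept. This is a hedged sketch rather than the method used here: `score_fn` is a hypothetical stand-in for cropping, embedding and scoring a region, and the scales and grid spacing are arbitrary. Because the full frame is always a candidate, reverting to the original amounts to returning the full-frame box.

```python
from typing import Callable, Sequence, Tuple

Box = Tuple[int, int, int, int]  # (x, y, width, height)


def best_crop(width: int, height: int,
              score_fn: Callable[[Box], float],
              scales: Sequence[float] = (1.0, 0.8, 0.6),
              steps: int = 4) -> Tuple[Box, float]:
    """Grid search over crops at a few scales, keeping the aspect ratio.

    score_fn is a hypothetical scorer for a crop box. The full frame is scored
    first and only replaced by a strictly better crop, so the result is never
    worse than the original."""
    best_box: Box = (0, 0, width, height)
    best_score = score_fn(best_box)
    for s in scales:
        cw, ch = round(width * s), round(height * s)
        # Slide the crop across an evenly spaced (steps + 1) x (steps + 1) grid.
        for i in range(steps + 1):
            for j in range(steps + 1):
                x = (width - cw) * i // steps
                y = (height - ch) * j // steps
                box = (x, y, cw, ch)
                score = score_fn(box)
                if score > best_score:
                    best_box, best_score = box, score
    return best_box, best_score
```

A grid search like this is simple but costly, since every candidate needs its own score; a production system might prune candidates or score a downsampled image instead.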

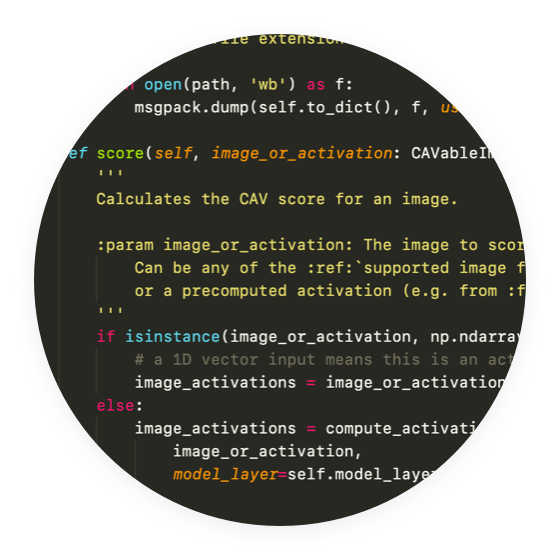

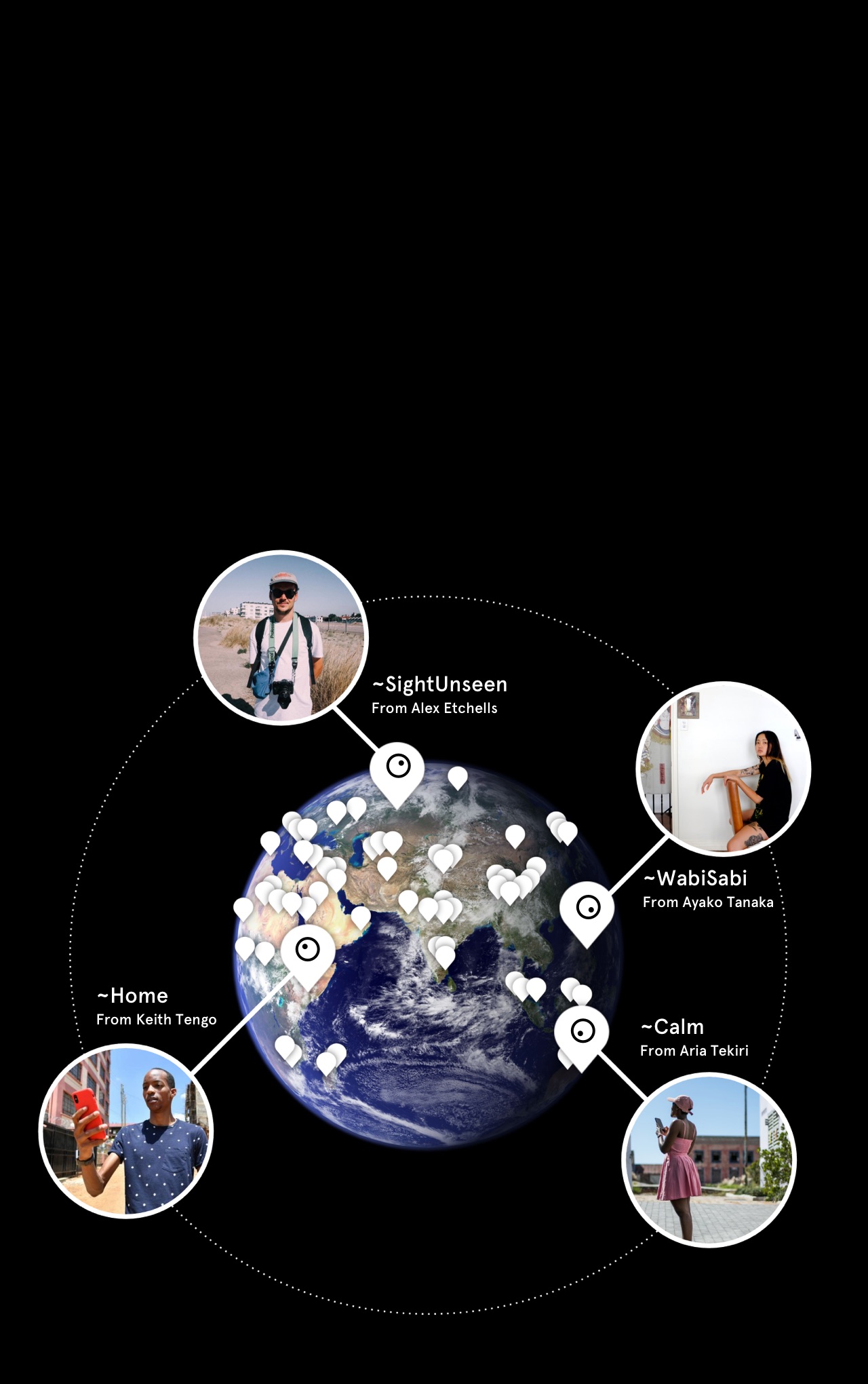

On-device personalisation

Take an existing concept and use it as a starting point to add your own perspective. ‘Personalise’ provides graded controls for ranking the images you’ve taken from best to worst; the AI then re-learns the concept from these positive and negative signals, skewing the model towards your own interpretation of the concept.

Everything is processed on-device, so what’s personal to you stays that way.
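The re-learning step could take several forms. The sketch below swaps in a simple Rocchio-style update, which is not necessarily the method used here: the best-to-worst ranking becomes weights from +1 to -1, and the concept vector is nudged towards highly ranked image embeddings and away from low-ranked ones. `personalise`, `rank_weights` and `strength` are hypothetical names, and the concept is assumed to be a plain vector in the same space as the image embeddings.

```python
import math
from typing import List, Sequence


def rank_weights(n: int) -> List[float]:
    """Turn a best-to-worst ordering of n images into weights from +1 to -1."""
    if n == 1:
        return [1.0]
    return [1.0 - 2.0 * i / (n - 1) for i in range(n)]


def personalise(cav: Sequence[float],
                ranked_embeddings: Sequence[Sequence[float]],
                strength: float = 0.5) -> List[float]:
    """Rocchio-style update (an assumed stand-in for re-learning the concept).

    Nudges the concept vector towards embeddings ranked highly and away from
    those ranked low, then renormalises to unit length."""
    weights = rank_weights(len(ranked_embeddings))
    total = sum(abs(w) for w in weights) or 1.0
    direction = [0.0] * len(cav)
    for w, emb in zip(weights, ranked_embeddings):
        for k, v in enumerate(emb):
            direction[k] += w * v / total
    updated = [c + strength * d for c, d in zip(cav, direction)]
    norm = math.sqrt(sum(u * u for u in updated)) or 1.0
    return [u / norm for u in updated]
```

The update needs only the user's own embeddings and a few vector operations, which suits keeping the computation on-device.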

Tom